Did you know you can extract data from almost any website using a technique called web scraping? Web scraping lets you automatically gather content such as articles, headlines, and publication dates from the internet using scripts. In this tutorial, you'll learn how to scrape article titles, links, and images from a news site such as Kompas.com. Remember, this guide is strictly for educational purposes, not for abusing or violating any site’s rules.

Why Should You Care About Web Scraping?

Web scraping has tons of benefits, especially for developers, data researchers, and digital marketers. With web scraping, you can automate data collection instead of copying items one by one. Here are some key advantages:

- Quickly Gather News Data

You can grab the latest articles from sites like Kompas.com for analysis. This is helpful for tracking tech trends or understanding hot topics.

- Analyze Market and Competition

Businesses use scraping to monitor product prices, track competitor strategies, and stay on top of market trends.

- Build Datasets for Machine Learning

Scrape news articles to create datasets for natural language processing or sentiment analysis projects.

- Automate Information Searching

Instead of hunting for info manually, scraping lets you gather details like schedules, prices, or reviews automatically.

- Save Loads of Time

Scraping can turn hours of tedious copy-paste work into just minutes of automated processing.

Is Web Scraping Allowed?

The short answer: yes, but with important caveats. Follow these best practices to keep your scraping legal and ethical:

- Check robots.txt

Most websites publish a robots.txt file that outlines what bots can and can't access. Only scrape the parts of the site that it permits.

- Respect the Terms of Service (ToS)

Some sites specifically forbid data extraction by bots. Always read and understand each site's ToS before scraping.

- Use Data for Education or Research

It's best to use scraped data for research, experimentation, or non-commercial projects. If you use it for business without permission, you risk legal trouble.

- Protect Target Servers

Don’t flood servers with rapid-fire requests. Add delays between requests to avoid being mistaken for an attack and to keep things respectful.
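
To make the robots.txt and rate-limiting advice concrete, here's a minimal sketch using Python's built-in urllib.robotparser and time.sleep. The function names (build_parser, polite_fetch), the fetch callback, and the one-second default delay are assumptions made for illustration; they are not part of the scraper built in this tutorial.

```python
import time
from urllib.robotparser import RobotFileParser


def build_parser(robots_lines):
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser


def polite_fetch(urls, parser, fetch, user_agent="*", delay_seconds=1.0):
    """Fetch only the URLs robots.txt allows, pausing after each request.

    `fetch` is any callable that takes a URL and returns a result
    (hypothetical here; in practice it could wrap requests.get).
    """
    results = {}
    for url in urls:
        if not parser.can_fetch(user_agent, url):
            continue  # skip paths the site asks bots not to visit
        results[url] = fetch(url)
        time.sleep(delay_seconds)  # give the server room to breathe
    return results
```

In a real script you would pass in the lines of the site's actual robots.txt and pick a delay that suits the site. The right value is a judgment call, so treat one second as a starting point only.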

Tools and Libraries You'll Need

If you want to scrape data with Python, you’ll need:

- Requests (for HTTP requests)

- BeautifulSoup (bs4) (for HTML parsing)

- SQLite3 (for lightweight database storage; built-in with Python)

Installing Libraries

Before coding, install the necessary packages:

    pip install requests beautifulsoup4

Note: sqlite3 comes built-in with Python.

Implementation: Step-by-Step Guide

First, create a main.py file in your project's root folder. Let's walk through each step and explain what’s happening.

- Import the Libraries

    import sqlite3
    from urllib.parse import urljoin

    import requests
    from bs4 import BeautifulSoup

- Set a User-Agent Header

    HEADERS = {
        "User-Agent": "Mozilla/5.0"
    }

Adding headers makes your requests look like they're coming from a real browser, which reduces the chance of getting blocked.

- Helper to Grab Image URLs

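    def extract_img_url(img, base_url):
        """Return an absolute image URL for an <img> tag, or None."""
        # Lazy-loaded images usually keep the real URL in a data-* attribute
        for attr in ("data-src", "data-original", "data-lazy", "data-url"):
            val = img.get(attr)
            if val:
                return urljoin(base_url, val)

        # srcset lists several candidates; take the first one
        srcset = img.get("srcset")
        if srcset:
            first = srcset.split(",")[0].strip().split()[0]
            return urljoin(base_url, first)

        # Fall back to the plain src attribute
        src = img.get("src")
        if src:
            return urljoin(base_url, src)

        return None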

Many sites use lazy-loading for images, so URLs might not be in the typical src attribute. This helper searches for the right attribute to grab the real image.
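
To see what the helper's URL handling does in practice, here's a small standalone sketch. The image paths and the cdn.example.com host are made up for illustration, and first_srcset_url is a hypothetical name that simply mirrors the srcset branch of the helper.

```python
from urllib.parse import urljoin

BASE = "https://tekno.kompas.com/"


def first_srcset_url(srcset, base_url):
    """Return the first candidate URL in a srcset value, made absolute."""
    first = srcset.split(",")[0].strip().split()[0]
    return urljoin(base_url, first)


examples = {
    "relative path": urljoin(BASE, "/img/photo.jpg"),
    "protocol-relative": urljoin(BASE, "//cdn.example.com/photo.jpg"),
    "already absolute": urljoin(BASE, "https://cdn.example.com/photo.jpg"),
    "srcset": first_srcset_url("/img/small.jpg 320w, /img/large.jpg 640w", BASE),
}

for label, url in examples.items():
    print(f"{label}: {url}")
# relative path: https://tekno.kompas.com/img/photo.jpg
# protocol-relative: https://cdn.example.com/photo.jpg
# already absolute: https://cdn.example.com/photo.jpg
# srcset: https://tekno.kompas.com/img/small.jpg
```

One caveat: splitting srcset on commas is a simplification. It can misfire if an image URL itself contains a comma, so treat it as good enough for a tutorial rather than a full srcset parser.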

- Fallback for og:image Metadata

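    def extract_og_image(article_url):
        """Fetch the article page and return its og:image URL, or None."""
        try:
            r = requests.get(article_url, headers=HEADERS, timeout=15)
            r.raise_for_status()  # treat 4xx/5xx responses as failures
        except requests.RequestException:
            return None

        s = BeautifulSoup(r.text, "html.parser")
        meta = s.find("meta", property="og:image") or s.find("meta", attrs={"name": "og:image"})
        if meta and meta.get("content"):
            # Resolve against the article URL in case the value is relative
            return urljoin(article_url, meta["content"])
        return None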

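If the listing has no usable image for an article, this function fetches the article page itself and reads the main thumbnail from its og:image metadata. Keep in mind that this costs one extra request per article.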
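
If you're curious what that metadata lookup involves, here's a dependency-free sketch that runs on an HTML string instead of a live page. Note the swap: it uses the standard library's html.parser rather than BeautifulSoup, and the class name OgImageParser, the function find_og_image, and the sample HTML are all assumptions for illustration.

```python
from html.parser import HTMLParser


class OgImageParser(HTMLParser):
    """Remember the content of the first og:image <meta> tag seen."""

    def __init__(self):
        super().__init__()
        self.og_image = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.og_image is not None:
            return
        attr_map = dict(attrs)
        # Open Graph tags normally use property=, but some pages use name=
        key = attr_map.get("property") or attr_map.get("name")
        if key == "og:image" and attr_map.get("content"):
            self.og_image = attr_map["content"]


def find_og_image(html):
    """Return the og:image URL found in an HTML string, or None."""
    parser = OgImageParser()
    parser.feed(html)
    return parser.og_image


sample = (
    '<html><head>'
    '<meta property="og:title" content="Some headline">'
    '<meta property="og:image" content="https://example.com/thumb.jpg">'
    '</head><body></body></html>'
)
print(find_og_image(sample))  # https://example.com/thumb.jpg
```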

- Establish an SQLite Connection

    conn = sqlite3.connect("kompas.db")
    cursor = conn.cursor()

    cursor.execute("""
        CREATE TABLE IF NOT EXISTS kompas_news (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            title TEXT,
            link TEXT UNIQUE,
            image TEXT
        )
    """)
    conn.commit()

This creates a simple database and a table to store scraped results.

- Scrape Articles from the Homepage

    base = "https://tekno.kompas.com/"

    resp = requests.get(base, headers=HEADERS, timeout=20)
    resp.raise_for_status()  # stop early if the homepage request failed

    soup = BeautifulSoup(resp.text, "html.parser")
    articles = soup.find_all("div", class_="article__grid")

This part downloads the homepage and parses it for article containers.
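
Site markup changes over time, so it can help to sanity-check your selectors against a saved snippet before hitting the live page. Here's a minimal sketch of that idea. The sample HTML is made up and only mirrors the class names used in this tutorial (the live site's markup may differ), and parse_articles is a hypothetical helper name.

```python
from bs4 import BeautifulSoup

# Made-up markup that mirrors the class names this tutorial assumes
SAMPLE_HTML = """
<div class="article__grid">
  <div class="article__asset"><img data-src="/img/a.jpg"></div>
  <h3 class="article__title"><a href="https://tekno.kompas.com/read/a">First headline</a></h3>
</div>
<div class="article__grid">
  <h3 class="article__title"><a href="https://tekno.kompas.com/read/b">Second headline</a></h3>
</div>
"""


def parse_articles(html):
    """Return (title, link) pairs for every article container in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for card in soup.find_all("div", class_="article__grid"):
        title_tag = card.find("h3", class_="article__title")
        link_tag = title_tag.find("a") if title_tag else None
        if not link_tag or not link_tag.has_attr("href"):
            continue  # skip containers without a usable link
        rows.append((title_tag.get_text(strip=True), link_tag["href"]))
    return rows


for title, link in parse_articles(SAMPLE_HTML):
    print(title, "->", link)
```

If find_all returns an empty list on the real page, the class names have probably changed. Open the page in your browser's developer tools and update the selectors to match.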

- Extract and Save Data to the Database

    for article in articles:
        # Title and link live inside the <h3 class="article__title"> element
        title_tag = article.find("h3", class_="article__title")
        title = title_tag.get_text(strip=True) if title_tag else None
        link_tag = title_tag.find("a") if title_tag else None
        # Resolve relative hrefs against the base URL
        link = urljoin(base, link_tag["href"]) if link_tag and link_tag.has_attr("href") else None

        img_tag = article.select_one("div.article__asset img") or article.find("img")
        image = extract_img_url(img_tag, base) if img_tag else None

        # Fallback: get og:image if no image found
        if not image and link:
            image = extract_og_image(link)

        # The UNIQUE constraint on link plus INSERT OR IGNORE skips duplicates
        if title and link:
            cursor.execute(
                "INSERT OR IGNORE INTO kompas_news (title, link, image) VALUES (?, ?, ?)",
                (title, link, image)
            )

    conn.commit()
    conn.close()
    print("Data has been saved to kompas.db")

Here's what's happening:

- The script looks for the article title and link inside specific tags.

- For the image, it first tries the main container and falls back to metadata if the image is missing.

- It saves each unique article to the database and avoids duplicates.
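
The duplicate handling comes from pairing the UNIQUE constraint on link with INSERT OR IGNORE. Here's a small sketch that shows the effect on an in-memory database, so it won't touch kompas.db. The helper names (count_rows, save_articles) and the example.com rows are assumptions for illustration.

```python
import sqlite3


def count_rows(conn):
    """Return the number of rows currently in kompas_news."""
    return conn.execute("SELECT COUNT(*) FROM kompas_news").fetchone()[0]


def save_articles(conn, rows):
    """Insert (title, link, image) rows; return how many were actually new."""
    before = count_rows(conn)
    conn.executemany(
        "INSERT OR IGNORE INTO kompas_news (title, link, image) VALUES (?, ?, ?)",
        rows,
    )
    conn.commit()
    return count_rows(conn) - before


conn = sqlite3.connect(":memory:")  # throwaway database for the demo
conn.execute("""
    CREATE TABLE IF NOT EXISTS kompas_news (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        title TEXT,
        link TEXT UNIQUE,
        image TEXT
    )
""")

rows = [
    ("Headline A", "https://example.com/a", None),
    ("Headline A (again)", "https://example.com/a", None),  # same link
    ("Headline B", "https://example.com/b", "https://example.com/b.jpg"),
]

first_run = save_articles(conn, rows)
second_run = save_articles(conn, rows)
print(first_run, second_run)  # 2 0
```

This is why you can rerun the scraper as often as you like: rows whose link is already stored are silently skipped instead of raising an error.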

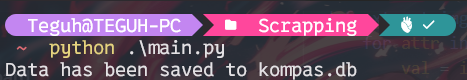

- Check Your Results

Run your script with:

    python main.py

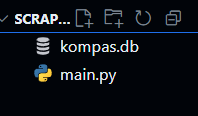

If everything works, you’ll see the message "Data has been saved to kompas.db". Then check your project root and make sure there's a new kompas.db file.

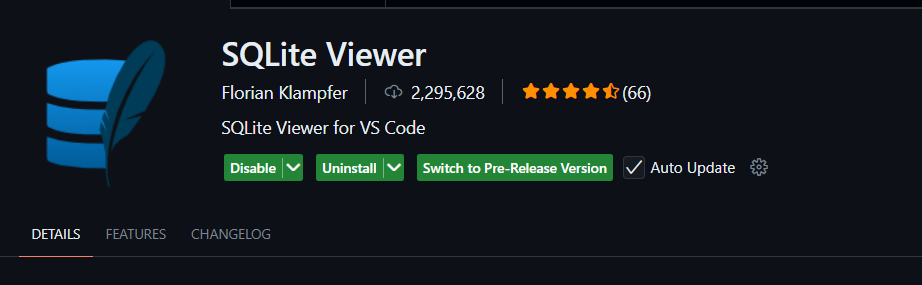

To easily view the database in VS Code, install the SQLite Viewer extension.
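
If you'd rather stay in the terminal, you can also read the rows back with Python's built-in sqlite3 module. This is a minimal sketch that assumes the kompas_news table created earlier; latest_articles is a hypothetical helper name.

```python
import sqlite3


def latest_articles(conn, limit=5):
    """Return the most recently inserted (id, title, link) rows."""
    cursor = conn.execute(
        "SELECT id, title, link FROM kompas_news ORDER BY id DESC LIMIT ?",
        (limit,),
    )
    return cursor.fetchall()
```

To use it after running main.py, open the file with sqlite3.connect("kompas.db"), pass the connection to latest_articles, and print each row in a loop. Close the connection when you're done.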

Wrapping Up

Python web scraping with requests and BeautifulSoup makes extracting news data from Kompas.com straightforward. Use your scraped data to analyze news trends, dig into text mining, or even feed a machine learning model. Just remember to always respect the site's policies.

Thanks for following along!